Why We Built It

To move beyond simple "chatting with AI" and create an experience of actually "spending time" together, we designed a general-purpose dialogue foundation balancing responsiveness, extensibility, and personality expression.

We combine speech recognition, LLMs, 3D graphics, and speech synthesis to deliver conversations that feel as if the character is right in front of you.

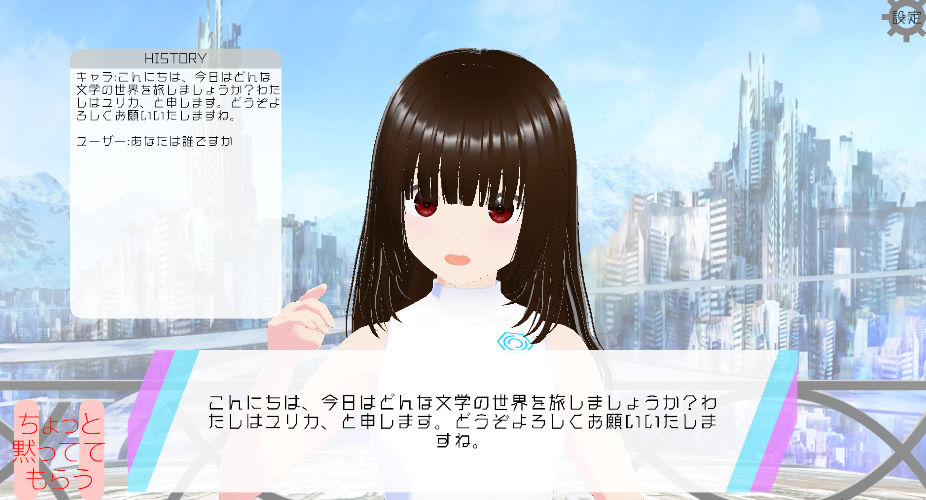

"With You" is a desktop app that lets you chat with AI characters at a natural pace. Putting immersion first, it tightly integrates language processing, 3D models, speech synthesis, and the UI so the character feels like it is right there with you.

In the latest release, the app can instantly generate a character's personality, appearance, and voice from a user's request, evolving from a single-character setup into a platform that can spawn any number of personas.


Experiences and technologies provided in the latest version of "With You."

Always-listening mode lets users interrupt even while the AI is talking, enabling casual back-and-forth and interjections at a natural tempo.
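A minimal sketch of how barge-in could be handled, assuming the recognizer stays open in every state and calls a handler for each recognized utterance. `BargeInController`, its states, and its method names are hypothetical and do not come from the app. The idea shown is that each user utterance bumps a turn counter, so a reply generated for an earlier turn is dropped instead of spoken.

```python
from dataclasses import dataclass, field
from enum import Enum, auto


class State(Enum):
    LISTENING = auto()  # idle; the microphone stays open in every state
    THINKING = auto()   # waiting for the LLM reply to the latest utterance
    SPEAKING = auto()   # synthesized speech is playing


@dataclass
class BargeInController:
    """Hypothetical controller for an always-listening conversation loop."""
    state: State = State.LISTENING
    turn_id: int = 0                              # bumped on every user utterance
    log: list[str] = field(default_factory=list)  # actions taken, for illustration

    def on_user_utterance(self, text: str) -> int:
        """Called by the always-on recognizer, even while the character talks."""
        if self.state is State.SPEAKING:
            self.log.append("stop_playback")          # cut the character off mid-sentence
        elif self.state is State.THINKING:
            self.log.append("discard_pending_reply")  # the old request is now irrelevant
        self.turn_id += 1                             # replies for older turns become stale
        self.state = State.THINKING
        self.log.append(f"user:{text}")
        return self.turn_id

    def on_reply_ready(self, turn_id: int, reply: str) -> bool:
        """Called when the LLM answers; returns False if the reply was dropped."""
        if turn_id != self.turn_id:
            return False                              # the user interrupted; don't speak it
        self.state = State.SPEAKING
        self.log.append(f"speak:{reply}")
        return True

    def on_playback_finished(self) -> None:
        if self.state is State.SPEAKING:              # ignore a stop caused by barge-in
            self.state = State.LISTENING
```

Tagging each reply with the turn it answers keeps the logic simple: an interruption never has to chase down an in-flight network request, it only has to make that request's result stale.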

Built on OpenAI's Chat Completions API. Output strictly follows a JSON schema so conversation data and configurations remain structured.
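As a sketch of the structured-output request, the body below uses the Chat Completions `response_format` parameter with `type: "json_schema"` and `strict: true`, then parses the JSON string returned in `choices[0].message.content`. The reply schema (`reply`, `emotion`), the emotion labels, and the `MODEL_NAME` placeholder are illustrative assumptions, not the app's actual schema or model choice.

```python
import json

# Hypothetical reply schema; field names and emotion labels are illustrative only.
REPLY_SCHEMA = {
    "type": "object",
    "properties": {
        "reply": {"type": "string"},
        "emotion": {"type": "string", "enum": ["neutral", "happy", "sad", "angry", "surprised"]},
    },
    "required": ["reply", "emotion"],  # strict mode expects every property to be required
    "additionalProperties": False,     # and disallows extra keys
}


def build_chat_request(history: list[dict], model: str = "MODEL_NAME") -> dict:
    """Request body for POST https://api.openai.com/v1/chat/completions."""
    return {
        "model": model,  # placeholder: use a model that supports structured outputs
        "messages": history,
        "response_format": {
            "type": "json_schema",
            "json_schema": {"name": "character_reply", "strict": True, "schema": REPLY_SCHEMA},
        },
    }


def parse_reply(response: dict) -> dict:
    """Extract the schema-conforming JSON object from a Chat Completions response."""
    content = response["choices"][0]["message"]["content"]
    data = json.loads(content)
    missing = [key for key in REPLY_SCHEMA["required"] if key not in data]
    if missing:
        raise ValueError(f"reply is missing fields: {missing}")
    return data
```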

Uses the local VOICEVOX HTTP API for streaming synthesis, minimizing the delay before speech begins while keeping audio quality high.
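VOICEVOX engine synthesis is normally a two-step exchange: `POST /audio_query` converts text into an AudioQuery JSON, and `POST /synthesis` renders that query into WAV audio. The sketch below builds both requests with Python's standard library, assuming the engine runs locally on its default port 50021; the speaker ID passed in is just an example, and how the app itself streams the resulting audio is not shown.

```python
import json
import urllib.parse
import urllib.request

VOICEVOX_URL = "http://127.0.0.1:50021"  # default address of a locally running VOICEVOX engine


def audio_query_request(text: str, speaker: int) -> urllib.request.Request:
    """Step 1: POST /audio_query turns text into an editable AudioQuery (JSON)."""
    qs = urllib.parse.urlencode({"text": text, "speaker": speaker})
    return urllib.request.Request(f"{VOICEVOX_URL}/audio_query?{qs}", method="POST")


def synthesis_request(query: dict, speaker: int) -> urllib.request.Request:
    """Step 2: POST /synthesis renders the AudioQuery into WAV bytes."""
    qs = urllib.parse.urlencode({"speaker": speaker})
    return urllib.request.Request(
        f"{VOICEVOX_URL}/synthesis?{qs}",
        data=json.dumps(query).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def synthesize(text: str, speaker: int) -> bytes:
    """Run both steps against the engine and return WAV data (needs VOICEVOX running)."""
    with urllib.request.urlopen(audio_query_request(text, speaker)) as resp:
        query = json.load(resp)
    # The AudioQuery could be tweaked here (e.g. its speedScale) before synthesis.
    with urllib.request.urlopen(synthesis_request(query, speaker)) as resp:
        return resp.read()
```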

Natural-language prompts are converted into JSON covering tone, character settings, appearance, and voice, and the result is launched as a new persona right away.

Hair color, eye color, and VRM models can be customized at runtime through material operations, and colors are automatically tuned to stay in harmony.
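The source does not describe its color-harmony rule, so the following is only one possible approach: derive the eye color from the hair color by rotating the hue slightly (an analogous color) and clamping saturation and brightness. `harmonize_eye_color` and all of its thresholds are hypothetical.

```python
import colorsys


def harmonize_eye_color(hair_rgb, hue_shift_deg=30.0, min_sat=0.35, max_val=0.85):
    """Hypothetical harmony rule: derive an eye color analogous to the hair color.

    hair_rgb is an (r, g, b) tuple with components in 0..1, as material colors usually are.
    """
    h, s, v = colorsys.rgb_to_hsv(*hair_rgb)
    h = (h + hue_shift_deg / 360.0) % 1.0  # analogous hue: a small rotation on the color wheel
    s = max(s, min_sat)                    # keep eyes from washing out to grey
    v = min(max(v, 0.3), max_val)          # avoid pitch-black or glaring eyes
    return colorsys.hsv_to_rgb(h, s, v)
```

For example, pure red hair `(1.0, 0.0, 0.0)` yields an orange eye color of roughly `(0.85, 0.425, 0.0)`. A complementary rule (a 180° shift) would give stronger contrast; an analogous shift tends to look more cohesive.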

Generated characters are saved with PlayerPrefs so you can pick up conversations with the same personality after a restart.

Five core domains work closely together with Unity at the center.

Loads multiple VRM models at runtime, synchronizing blinking, mouth shapes, facial expressions, and body motions. Lip sync is driven by audio gain analysis.
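Gain-driven lip sync can be sketched as follows: compute the RMS loudness of each audio frame, ignore frames below a noise gate, scale the rest into a 0 to 1 mouth-open weight, and smooth it between frames. The function name and constants (frame size, gate, gain, smoothing) are assumptions for illustration. In Unity, samples could be read with `AudioSource.GetOutputData`, and the weight would drive the VRM mouth blend shape.

```python
import math


def mouth_open_weights(samples, frame_size=735, noise_gate=0.02, gain=4.0, smoothing=0.5):
    """Map PCM samples (floats in -1..1) to per-frame mouth-open weights in 0..1.

    frame_size=735 is one 60 fps frame of 44.1 kHz audio (44100 / 60 = 735).
    """
    weights, current = [], 0.0
    for start in range(0, len(samples), frame_size):
        frame = samples[start:start + frame_size]
        rms = math.sqrt(sum(x * x for x in frame) / len(frame))  # loudness of this frame
        # Below the gate the mouth closes; above it, loudness scales linearly, capped at 1.
        target = 0.0 if rms < noise_gate else min(1.0, (rms - noise_gate) * gain)
        current += (target - current) * smoothing  # exponential smoothing avoids jitter
        weights.append(current)
    return weights
```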

Builds on Windows' DictationRecognizer to offer an offline-ready, always-listening mode that requires no external APIs.

Receives LLM output as JSON and strictly manages conversation policy and context. Models are selected according to the use case.

Sequential synthesis via the local VOICEVOX API uses GPU processing to shorten the time before a response starts and keep the conversation flowing naturally.
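One way sequential synthesis can shorten the time before speech starts is to split a reply into sentences and synthesize them in a background thread, so the first sentence plays while later ones are still being generated. This is a sketch under that assumption: `split_sentences` and `pipelined_speech` are hypothetical names, and the `synthesize` argument stands in for a call to the local VOICEVOX engine.

```python
import queue
import re
import threading
from typing import Callable, Iterator

# Split after Japanese or English sentence terminators, keeping the punctuation.
_SENTENCE_END = re.compile(r"(?<=[。！？!?.])\s*")


def split_sentences(text: str) -> list[str]:
    return [s for s in _SENTENCE_END.split(text) if s.strip()]


def pipelined_speech(text: str, synthesize: Callable[[str], bytes]) -> Iterator[bytes]:
    """Yield audio per sentence, in order, while later sentences are synthesized."""
    chunks: queue.Queue = queue.Queue(maxsize=2)  # stay at most two sentences ahead
    done = object()                               # sentinel marking the end of the stream

    def producer() -> None:
        try:
            for sentence in split_sentences(text):
                chunks.put(synthesize(sentence))
        finally:
            chunks.put(done)  # always unblock the consumer, even if synthesis fails

    # Daemon thread: if the caller stops early, a blocked producer won't keep the app alive.
    threading.Thread(target=producer, daemon=True).start()
    while (item := chunks.get()) is not done:
        yield item  # the caller plays this while the producer works on the next sentence
```

With this shape, the wait before speech begins is roughly the synthesis time of the first sentence rather than of the whole reply.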

Persists settings and history in PlayerPrefs so generated characters can be recalled and conversations resumed anytime.

Natural-language requests from the user are transformed into JSON that packages personality, appearance, and voice, and the result is applied instantly. Schema validation filters out anomalous output to keep generation stable.
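Even with schema-constrained output, an application-side check before applying a persona is a cheap extra guard. The field names below (`name`, `tone`, `appearance.hair_color`, `appearance.eye_color`, `voice.speaker_id`) and their limits are hypothetical, chosen only to illustrate rejecting anomalous values before they reach the model, materials, or voice settings.

```python
import re

HEX_COLOR = re.compile(r"#[0-9A-Fa-f]{6}")  # e.g. "#3A2F2B"


def validate_persona(data: object) -> list[str]:
    """Return a list of problems; an empty list means the persona can be applied."""
    if not isinstance(data, dict):
        return ["top level must be an object"]
    errors = []
    name = data.get("name")
    if not isinstance(name, str) or not 1 <= len(name) <= 32:
        errors.append("name must be a 1-32 character string")
    if not isinstance(data.get("tone"), str):
        errors.append("tone must be a string")
    appearance = data.get("appearance")
    if not isinstance(appearance, dict):
        errors.append("appearance must be an object")
    else:
        for key in ("hair_color", "eye_color"):
            value = appearance.get(key)
            if not isinstance(value, str) or not HEX_COLOR.fullmatch(value):
                errors.append(f"appearance.{key} must look like #RRGGBB")
    voice = data.get("voice")
    speaker = voice.get("speaker_id") if isinstance(voice, dict) else None
    # bool is a subclass of int in Python, so reject it explicitly.
    if not isinstance(speaker, int) or isinstance(speaker, bool) or speaker < 0:
        errors.append("voice.speaker_id must be a non-negative integer")
    return errors
```

Returning every problem at once, rather than failing on the first, makes it easy to log why a generation was rejected or to send the errors back to the LLM for a retry.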

Example: “Make her a kind, smart childhood-friend type.” Free-form instructions are welcome.

Outputs structured personality settings that follow a response_format=json_schema definition.

Loads the model, adjusts materials, and assigns the VOICEVOX speaker automatically so conversations can start immediately.

Saves the generated settings so the same personality and memories return when the app restarts.

Breaks free from rigid turn-taking with an always-listening architecture that lets users interrupt mid-sentence. Reactions can be inserted at a pace similar to talking with another person.

Analyzes response characteristics per model and accelerates replies with streaming synthesis and GPU generation, keeping conversations lively.

We're planning a "personal AI cloud" where generated character settings live online so you can talk to the same persona from phones or browsers.

The goal is a continuous personality experience where the AI feels alive even when the app is closed.

We imagine commercial and public spaces where tailored characters provide multilingual guidance as an interactive alternative to static signage.

By adapting to each situation, these characters can deliver the right information through conversation.

What started as a hobby project now spans multiple technologies in pursuit of better human-AI conversations. Even with limited resources, we iterate fast and never stop evolving.

To keep pace with rapidly advancing generative AI, we constantly update our knowledge and chase richer dialogue and expression.